29 Palms Fixed/Mobile Experiment

Tracking vehicles with a UAV-delivered sensor network.

UC Berkeley and MLB Co

March 12-14

Marine Corps Air/Ground Combat Center (MCAGCC)

Twentynine Palms, CA

Goal

The goals of the experiment were to

- Deploy a sensor network onto a road from an unmanned aerial vehicle (UAV).

- Establish a time-synchronized multi-hop communication network among the nodes on the ground.

- Detect and track vehicles passing through the network.

- Transfer vehicle track information from the ground network to the UAV.

- Transfer vehicle track information from the UAV to an observer at the base camp.

Details are below, or you can just skip to the results.

Background

People have been talking about combining MEMS sensors with wireless communication

for about a decade. The Smart

Dust effort at Berkeley is one of the many projects in this area.

As a part of Smart Dust, we implemented wireless sensor nodes using off-the-shelf

components in custom boards. The nodes, known as macro-motes or just

motes, are about a cubic inch in volume and contain sensors, a microprocessor,

RF comm., and a battery or solar cell for power. David Culler and

his students got interested in these platforms through our interaction

on the Endeavour

project, and quickly wrote an operating system called TinyOS.

A new family of modular nodes was designed by the TinyOS/Dust team, and

it was good. :)

Shankar Sastry and Kannan Ramchandran felt that there were interesting

theoretical issues to be studied in the operation of distributed sensor

networks, and wrote a proposal on Sensorwebs

which was ultimately funded (under SensIT) after Shankar had already left

for DARPA (to run ITO). Having been cajoled into being a co-PI on

Sensorwebs, I was a natural target when Sri Kumar, the SensIT PM, called

in June 2000 and asked for "a fixed/mobile demo" from our theoretical team!

Building on the work done in Dust and Endeavour, and with some additional

funding from Sri, we pulled it off in 9 months.

Sensor Node Hardware

The sensor nodes consist of a motherboard (Rene), a sensor board, and a

power supply board. The motherboard has a microprocessor, 916.5 MHz

OOK radio, and support circuitry. The power supply board currently

sports just a battery connector. The sensor board has a 2-axis Honeywell

HMC1002 magnetometer with

roughly 1 mGauss resolution. All three boards and the lithium battery

weigh just under 1 ounce. The basic nodes can be purchased from Crossbow.

Battery life varies depending on what's powered up. With everything

on, the life is just an hour. With the magnetometer off, and the

radio turned on only once a second to check for messages, the lifetime

is many days.

The complete mote, and two motes as attractively packaged for dropping.

The magnetometer board has an amplifier and a software-controlled output

nulling feature (to trim out the DC component of the earth's magnetic field

so that it doesn't saturate the amplifier/ADC). Magnetic materials

moving near the magnetometer cause a change in the earth's field, and this

change is what the motes detect. The magnetometer signal is sampled

at 5 Hz.

The motes are able to detect passenger vehicles at more than 5 meters,

and buses and trucks at more than 10 meters. We didn't do any range

experiments in the desert, but had no trouble tracking any of the vehicles

at distances of 10 meters and more. A typical magnetometer signal

is shown below.

The green trace is the raw signal, yellow is the low-pass filtered signal, and red is the high-pass filtered yellow signal. The vertical lines mark the start/stop points of the mote's determination that a vehicle is present.
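The filter chain in the caption above (raw signal, low-pass, high-pass, then start/stop marks) can be sketched roughly as follows. This is a minimal illustration, not the mote firmware: the function name `detect_vehicles`, the filter constants, and the threshold are all assumptions, and the high-pass stage is approximated here by subtracting a slowly tracked baseline. Only the 5 Hz sample rate comes from the text.

```python
SAMPLE_HZ = 5  # magnetometer sample rate given in the text


def detect_vehicles(raw, lp_alpha=0.5, base_alpha=0.05, threshold=20.0):
    """Return (start, stop) sample-index pairs where a vehicle appears present.

    lp_alpha, base_alpha and threshold are illustrative guesses, not the
    values used on the motes.
    """
    if not raw:
        return []
    events = []
    low = raw[0]       # low-pass output (the "yellow" trace)
    baseline = raw[0]  # slow estimate of the ambient field
    start = None
    for i, x in enumerate(raw):
        # Single-pole low-pass to smooth sample noise.
        low += lp_alpha * (x - low)
        # High-pass approximated as low-pass output minus baseline ("red" trace).
        high = low - baseline
        active = abs(high) > threshold
        if active and start is None:
            start = i                    # start mark
        elif not active and start is not None:
            events.append((start, i))    # stop mark
            start = None
        if not active:
            # Track slow drift only while no vehicle is present, so a slow
            # vehicle is not absorbed into the baseline (a design assumption).
            baseline += base_alpha * (low - baseline)
    if start is not None:
        events.append((start, len(raw)))
    return events


# Synthetic example: flat field, a 2-second disturbance, flat field again.
signal = [100.0] * 20 + [200.0] * 10 + [100.0] * 20
for start, stop in detect_vehicles(signal):
    print("vehicle present for", (stop - start) / SAMPLE_HZ, "seconds")
```

Dividing a sample count by `SAMPLE_HZ` converts the start/stop marks to seconds, which is the form needed for any later timing comparison between motes.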

Aircraft

The aircraft is a fully autonomous, GPS-controlled pusher-prop with a 5' wingspan,

built by MLB Co. A custom mote-dropper

was built, including an integrated camera to view the motes as they are

dropped. The plane has a color video camera in the nose, and transmits

to an auto-tracking ground station from a distance of up to two miles.

The endurance of the aircraft as configured in the demo was 30 minutes, for a range of about

15 miles. Video range is currently 2 miles. Top speed is 60 mph.

These pictures are low-res versions of photos that were taken by the

UAV.

Two views of the basecamp where the UAV was launched, and the VIPs watched the show.

A HMMWV and a Dragon Wagon, two of our typical targets near the intersection.

Jason and Steve prepare the mote dropper for a launch.

The motes know the order that they will be dropped in, and use that for an initial guess at their relative locations, which they update as they get more track information.

And we still had 80 bytes of program memory left!

Results

It worked!

Over the course of the three-day experiment, just about everything that

could go wrong did go wrong, but ultimately we demonstrated everything

that we set out to demonstrate.

Monday March 12

-

The hand-emplaced network of 8 sensor nodes detected HMMWVs, LAVs, trucks,

dragon wagons, SUVs, etc. Anything that drove by was tracked, and

the network generated a direction and velocity estimate. We haven't

checked any ground-truth on the velocities, but all of the directions were

correct, and velocities matched our visual estimates.

-

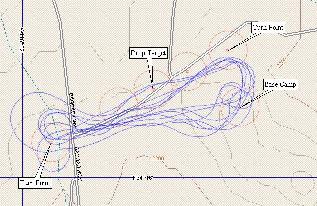

The UAV flew autonomously (course plot:

)

and dropped six motes from an altitude of 150 ft and a velocity of 30 mph.

The motes landed diagonally across the road on roughly 5 meter centers.

Perfect!

)

and dropped six motes from an altitude of 150 ft and a velocity of 30 mph.

The motes landed diagonally across the road on roughly 5 meter centers.

Perfect!

-

Unfortunately, the batteries in the dropped motes had died because of several

false starts in launching the UAV.

Software re-writes keep the TinyOS team up until 3am. Mote power

consumption drops by a factor of 2 with the new software!
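For a sense of the timing behind Monday's drop figures (six motes, 30 mph, roughly 5 meter centers), here is a back-of-the-envelope sketch. It assumes a constant ground speed, no wind, and that every mote follows the same trajectory after release, so that spacing on the ground equals the distance the aircraft travels between releases. How the dropper was actually timed is not described here; the function names are hypothetical.

```python
MPH_TO_MPS = 0.44704  # exact conversion: 1 mph = 0.44704 m/s


def release_interval_s(ground_speed_mph, spacing_m):
    """Seconds between releases needed for a given spacing along the flight path."""
    return spacing_m / (ground_speed_mph * MPH_TO_MPS)


def pattern_length_m(n_motes, spacing_m):
    """End-to-end length of a line of n_motes at equal spacing."""
    return (n_motes - 1) * spacing_m


interval = release_interval_s(30, 5)
print(f"release interval: {interval:.2f} s")               # about 0.37 s
print(f"pattern length:   {pattern_length_m(6, 5):.0f} m")  # 25 m
```

Under these assumptions the six motes would need to leave the aircraft in a little under two seconds.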

Tuesday March 13

- Everything goes wrong. We won't say anything more about Tuesday.

Wednesday March 14

-

The UAV autonomously delivers 6 motes on 5 meter centers again. Motes

are 20 meters from the road, but in a perfect pattern.

-

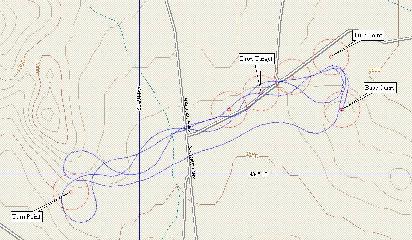

UAV course

and altitude.

Compare to the course plot above and you can tell that the winds were worse

on wednesday. Altitude is zero at basecamp, drops a bit when Steve

first throws the UAV from the top of the hill, and ends up at about -50

ft on landing near the intersection. "Bombing" runs are at 100 ft

elevation relative to the hilltop, 150ft from the intersection.

-

Here's the video from the mote drop as seen from the UAV

-

Once on the ground, the network of 6 motes synchronized their clocks and

waited for a vehicle to pass.

-

We had a dragon wagon drive "a little" off of the road (on the other side

of the berm next to the road).

-

The motes detected the dragon wagon, passed their "closest time of approach"

information around by multi-hop messaging, calculated the best least-squares

fit to the data, and stored it.

-

The UAV returned and transmitted a query to the ground network, which responded

with the track information: 4 fps at 11:31AM

-

The UAV flew over the base camp and transmitted the track information down

to a mote connected to a laptop. Success!
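The least-squares step in the list above can be illustrated with a small sketch. Only "closest time of approach" and "least-squares fit" come from the text; the rest is assumption. In particular, the sketch assumes the motes lie on a line roughly parallel to the vehicle's path, takes the initial positions from the drop order at 5 meter spacing, and fits position against time so that the slope is the speed. The names `positions_from_drop_order` and `fit_track` are hypothetical, and this is not the code that ran on the motes.

```python
def positions_from_drop_order(n_motes, spacing_m=5.0):
    """Initial guess: mote i sits i * spacing_m along the drop line."""
    return [i * spacing_m for i in range(n_motes)]


def fit_track(positions_m, times_s):
    """Least-squares line x = v * t + x0 through (time, position) pairs.

    Returns (v, x0). v is the speed along the mote line in m/s; its sign
    gives the direction of travel relative to the drop order.
    """
    n = len(times_s)
    if n < 2 or n != len(positions_m):
        raise ValueError("need at least two matching (time, position) pairs")
    t_mean = sum(times_s) / n
    x_mean = sum(positions_m) / n
    s_tt = sum((t - t_mean) ** 2 for t in times_s)
    if s_tt == 0:
        raise ValueError("all closest-approach times are identical")
    s_tx = sum((t - t_mean) * (x - x_mean)
               for t, x in zip(times_s, positions_m))
    v = s_tx / s_tt
    return v, x_mean - v * t_mean


# Made-up closest-approach times (seconds) for six motes, in drop order.
positions = positions_from_drop_order(6)
times = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]
speed, offset = fit_track(positions, times)
print(f"estimated speed: {speed:.2f} m/s")
```

Fitting position against time rather than time against position is a choice made here for readability; with noisy timestamps and well-known positions the reverse regression may be the better one.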

Other photos

Sri Kumar and Steve Morris; Kris Pister, Sri, and future motes; an

LAV and an M1 tank (photos from the summer '00 experiment); Rob Szewczyk

snoops on the ground network traffic with a couple of Majors.

The future

There is nothing in the current motes that cannot be miniaturized.

In three years this demo will be done with a 6" aircraft, and millimeter-scale

sensor nodes.

Participants and Sponsors

David Culler, UCB; Co-PI Endeavour (ITO)

Lance Doherty; Sensorwebs/algorithms; ldoherty@eecs.berkeley.edu

Jason Hill; Endeavour/TinyOS software and mote hardware; jhill@eecs.berkeley.edu

Mike Holden, MLB; flight software; mike@spyplanes.com

Charlie Kiers; Marine liaison to DARPA; pmpax@nosc.mil

Sri Kumar; DARPA/ITO; PM SensIT; skumar@darpa.mil

Julius Kusuma; Sensorwebs/algorithms; kusuma@eecs.berkeley.edu

Steve Morris, MLB; PI sub contracts of Smart Dust and Sensorwebs; mlbco@sirius.com

Kris Pister, UCB; PI Smart Dust (MTO); Co-PI, Sensorwebs (ITO); pister@eecs.berkeley.edu

Kannan Ramchandran, UCB; Co-PI, Sensorwebs (ITO); kannanr@eecs.berkeley.edu

Brian Robbins, MLB; aircraft mechanical

Jean Scholtz; DARPA/ITO; PM Ubiquitous computing; jscholtz@darpa.mil

Mike Scott; Sensorwebs/mote hardware; mdscott@eecs.berkeley.edu

Rob Szewczyk; Endeavour/TinyOS; szewczyk@eecs.berkeley.edu

Bill Tang; DARPA/MTO; PM MEMS; wtang@darpa.mil

Alec Woo; Endeavour/TinyOS; awoo@eecs.berkeley.edu
